This article examines the common programming models for multi-threaded servers. The multi-threaded server discussed here is a network application running on the Linux operating system. It introduces the typical single-threaded server programming model, the typical threading models of multi-threaded servers, and inter-process and inter-thread communication.

It summarizes one or two commonly used threading models, along with best practices for inter-process communication and thread synchronization, so that multi-threaded programs can be developed in a simple and disciplined way.

The "multi-threaded server" in this article refers to a proprietary network application running on a Linux operating system. The hardware platform is a multi-core Intel x64 CPU in a single- or dual-socket SMP server (four or eight cores in total per machine, with ten-plus GB of memory), and the machines are connected by 100 Mbit or Gigabit Ethernet. This is probably the mainstream configuration of today's commodity PC servers.

This article does not cover Windows systems or human-computer interfaces (whether command line or graphical). It does not consider file reading and writing (except for writing to disk), database operations, or web applications. It does not consider low-end single-core hosts, embedded systems, handheld devices, special-purpose network equipment, or high-end Unix hosts with 32 or more cores. It considers only TCP, not UDP, and no way of sending and receiving data other than the LAN (such as serial or parallel ports, USB ports, data acquisition boards, real-time control, and so on).

With so many restrictions, the basic function of the "network application" I am going to discuss can be summarized as "receive data, compute, then send the result out." In this simplified model there seems to be no need for multithreading; a single thread should do the job well. The question "Why write multi-threaded programs at all?" easily triggers a war of words, so I will discuss it in another blog post. Allow me to take "multithreaded programming" as a given for now.

The term "server" sometimes refers to a program, sometimes to a process, and sometimes to hardware (whether virtual or real); the context makes clear which. In addition, this article does not consider virtualization. When I say "two processes are not on the same machine", I mean that they are not logically running in the same operating system, even though they may physically reside on the same machine in separate virtual machines.

This article assumes that the reader already has the knowledge and experience of multithreaded programming. This is not an introductory tutorial.

1 Processes and threads

The "process" is one of the two most important concepts in an operating system (the other is the file). Roughly speaking, a process is "a program running in memory." In this article, a process means what the Linux operating system creates through the fork() system call, or what CreateProcess() produces under Windows, not the lightweight process of Erlang.

Each process has its own independent address space. Whether two things live "in the same process" or "not in the same process" is an important decision point when partitioning system functionality. The Erlang book likens a "process" to a "person", which I find very apt and which gives us a framework for thinking.

Each person has their own memory, and people communicate by talking (message passing). The talk can be face to face (same server) or over the phone (different servers, communicating over the network). The difference is that face to face you know immediately whether the other person has died (crash, SIGCHLD), whereas over the phone you can only judge whether the other party is still alive through a periodic heartbeat.

With these metaphors, you can "role-play" when designing a distributed system: several people on the team each play one process. A person's role is determined by the code of the process (one manages this, another manages distribution, and so on). Everyone has their own memory but does not know the memory of others; to learn what others think, you have to talk. (Shared-memory IPC is left out of consideration here.) Then you can think about fault tolerance (what if someone suddenly dies), scaling (newcomers join midway), load balancing (move a's work to b), and retirement (a needs a bug fix, so stop giving him new work, wait for him to finish what he has in hand, and then restart him). It is very convenient.

The concept of "threads" probably became popular only after 1993. That is little more than a decade ago, very recent compared with Unix's 40-year history. The emergence of threads added a lot of confusion to Unix: many C library functions (strtok(), ctime()) are not thread-safe and had to be redefined, and the semantics of signals became much more complicated. As far as I know, the first (commodity) operating systems to support multithreaded programming were Solaris 2.2 and Windows NT 3.1, both released in 1993. The POSIX threads standard followed in 1995.

Threads are characterized by a shared address space, which allows data to be shared efficiently. Multiple processes on one machine can efficiently share code segments (the operating system can map them to the same physical memory) but cannot share data. If multiple processes share a large amount of memory, that is equivalent to writing a multi-process program as if it were multi-threaded.

The value of "multithreading", I think, lies in making better use of symmetric multiprocessing (SMP). Before SMP, multithreading had little value. As Alan Cox said: "A computer is a state machine. Threads are for people who can't program state machines." If there is only one execution unit, a single CPU, then as Alan Cox said it is most efficient to write the program as a state machine, which is exactly the programming model shown in the next section.

2 Typical single-threaded server programming model

UNP3e summarizes this well (Chapter 6: IO Models, Chapter 30: Client/Server Design Paradigms), so I will not repeat it here. As far as I know, the most widely used model in high-performance network programs is probably "non-blocking IO + IO multiplexing", that is, the Reactor pattern. Examples I know of:

- lighttpd, a single-threaded server (nginx is probably similar; to be confirmed)

- libevent/libev

- ACE, Poco C++ libraries (Qt to be confirmed)

- Java NIO (Selector/SelectableChannel), Apache Mina, Netty (Java)

- POE (Perl)

- Twisted (Python)

In contrast, boost::asio and Windows I/O Completion Ports implement the Proactor pattern, which seems to be less widely used. ACE also implements the Proactor pattern, but I will not go into that here.

In the "non-blocking IO + IO multiplexing" model, the basic structure of the program is an event loop (the code is only illustrative and not complete):

    while (!done)
    {
      // Wake up no later than the next timer, and at least once per second.
      int timeout_ms = std::min(1000, getNextTimedCallback());
      int retval = ::poll(fds, nfds, timeout_ms);
      if (retval < 0) {
        // handle errors
      } else {
        // handle expired timers
        if (retval > 0) {
          // handle IO events
        }
      }
    }

Of course, select(2)/poll(2) have many shortcomings. On Linux they can be replaced with epoll, and other operating systems have their own high-performance alternatives (search for the c10k problem).
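
To illustrate the epoll replacement mentioned above, here is a minimal sketch, assuming Linux. The helper name waitReadableWithEpoll is made up for this example, and creating a fresh epoll instance on every call is only done to keep the sketch self-contained; a real Reactor would create the epoll file descriptor once and keep it for the lifetime of the loop.

```cpp
#include <sys/epoll.h>
#include <unistd.h>
#include <cassert>

// Hypothetical helper: waits up to timeout_ms for fd to become readable.
// Returns the number of ready events (0 on timeout, -1 on error).
int waitReadableWithEpoll(int fd, int timeout_ms)
{
  assert(fd >= 0);
  int epfd = ::epoll_create1(EPOLL_CLOEXEC);
  if (epfd < 0) {
    return -1;
  }

  struct epoll_event ev = {};
  ev.events = EPOLLIN;   // interested in "readable"
  ev.data.fd = fd;
  if (::epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &ev) < 0) {
    ::close(epfd);
    return -1;
  }

  struct epoll_event ready[16];
  int n = ::epoll_wait(epfd, ready, 16, timeout_ms);
  ::close(epfd);
  return n;
}
```

Unlike poll(2), the interest list lives in the kernel, so a long-lived loop does not have to pass the whole descriptor array on every call.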

The advantages of the Reactor model are obvious: programming is simple and efficiency is good. Not only network reads and writes but also connection establishment (connect/accept) and even DNS resolution can be done in a non-blocking manner to improve concurrency and throughput. It is a good choice for IO-intensive applications. Lighttpd works this way, and its internal fdevent structure is exquisite and worth studying. (Suboptimal schemes based on blocking IO are not considered here.)

Of course, implementing a good Reactor is not that easy. I have not used the open-source libraries, so I will not recommend any here.

3 Thread model of a typical multi-threaded server

I could not find much literature on this topic. There are roughly the following kinds:

1. Create one thread per request and use blocking IO. This was the recommended practice for Java network programming before Java 1.4 introduced NIO. Unfortunately, it does not scale well.

2. Use a thread pool, again with blocking IO. This improves performance compared with 1.

3. Use non-blocking IO + IO multiplexing. This is the Java NIO way.

4. Advanced patterns such as Leader/Follower.

By default, I use the third: non-blocking IO with one loop per thread.

http://pod.tst.eu/http://cvs.schmorp.de/libev/ev.pod#THREADS_AND_COROUTINES

One loop per thread

Under this model, each IO thread in the program has one event loop (or Reactor) that handles reads, writes, and timed events (periodic or one-shot). The code skeleton is the same as in Section 2.

The benefits of this approach are:

- The number of threads is basically fixed. It can be set when the program starts, and threads are not created and destroyed frequently.

- It is easy to distribute load among threads.

The event loop is the thread's main loop. To make a particular thread do some work, register the timer or IO channel (TCP connection) with that thread's loop. A connection with real-time requirements can have a thread to itself; a connection carrying a large amount of data can monopolize one thread and farm out data processing to several other threads; other, secondary connections can share one thread.

For non-trivial server programs, non-blocking IO + IO multiplexing is generally used. Each connection/acceptor is registered to a Reactor. There are multiple Reactors in the program, and each thread has at most one Reactor.

Multithreaded programs place a higher demand on the Reactor: it must be thread-safe. To allow one thread to put work into another thread's loop, that loop must be thread-safe.

Thread Pool

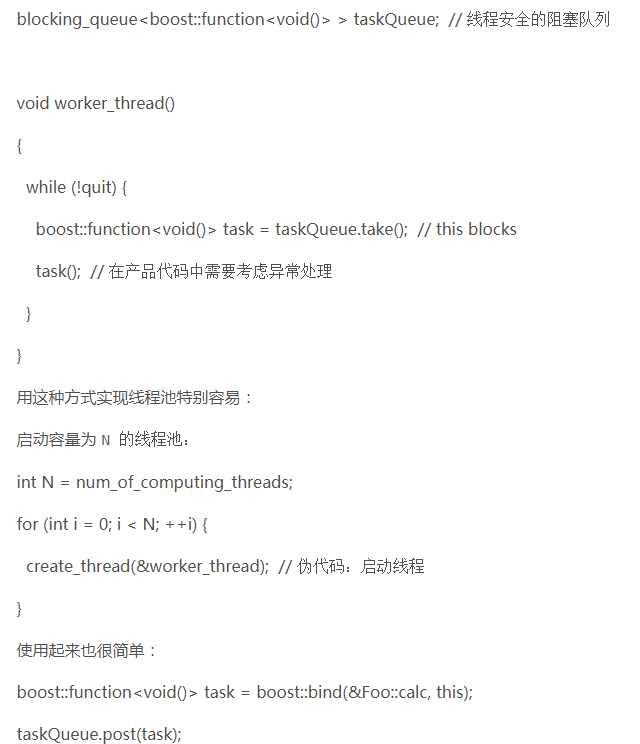

However, for threads that do no IO and only perform computation, an event loop is somewhat wasteful. For those I use a complementary solution, a task queue (TaskQueue) implemented with a blocking queue.

The ten or so lines of code that make up this task queue implement a simple thread pool with a fixed number of threads, roughly equivalent to one particular "configuration" of Java 5's ThreadPoolExecutor. In a real project, of course, the code should be wrapped in a class rather than use global objects. One more point to watch: the lifetime of the Foo object.
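
A minimal sketch of a fixed-size thread pool built on a blocking task queue might look like the following. It is not the original ten-line listing: it assumes C++11 (std::thread, std::mutex, std::condition_variable) instead of the MutexLock and Condition wrappers used in this article, and the names FixedThreadPool and sumWithPool are made up for illustration.

```cpp
#include <cassert>
#include <condition_variable>
#include <deque>
#include <functional>
#include <mutex>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical fixed-size pool: N workers take tasks from one blocking queue.
class FixedThreadPool
{
 public:
  explicit FixedThreadPool(int numThreads)
  {
    assert(numThreads > 0);
    for (int i = 0; i < numThreads; ++i) {
      threads_.emplace_back([this] { workerLoop(); });
    }
  }

  // Lets the workers finish every queued task, then joins them.
  ~FixedThreadPool()
  {
    {
      std::lock_guard<std::mutex> lock(mutex_);
      quit_ = true;
    }
    notEmpty_.notify_all();
    for (std::thread& t : threads_) {
      t.join();
    }
  }

  void post(std::function<void()> task)
  {
    {
      std::lock_guard<std::mutex> lock(mutex_);
      tasks_.push_back(std::move(task));
    }
    notEmpty_.notify_one();
  }

 private:
  void workerLoop()
  {
    for (;;) {
      std::function<void()> task;
      {
        std::unique_lock<std::mutex> lock(mutex_);
        while (tasks_.empty() && !quit_) {
          notEmpty_.wait(lock);
        }
        if (tasks_.empty()) {
          return;  // quit requested and nothing left to run
        }
        task = std::move(tasks_.front());
        tasks_.pop_front();
      }
      task();  // run the task outside the lock
    }
  }

  std::mutex mutex_;
  std::condition_variable notEmpty_;
  std::deque<std::function<void()>> tasks_;
  bool quit_ = false;
  std::vector<std::thread> threads_;
};

// Usage example: adds 1..n on four worker threads.
int sumWithPool(int n)
{
  int sum = 0;
  std::mutex sumMutex;
  {
    FixedThreadPool pool(4);
    for (int i = 1; i <= n; ++i) {
      pool.post([&sum, &sumMutex, i] {
        std::lock_guard<std::mutex> lock(sumMutex);
        sum += i;
      });
    }
  }  // the destructor drains the queue and joins the workers
  return sum;
}
```

Note that a task which captures a pointer or reference to some object runs later on another thread, so the object must outlive the task. That is the lifetime concern raised above.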

Besides the task queue, you can also use blocking_queue<T> to implement a producer-consumer queue of data. That is, T is a data type rather than a function object, and the queue's consumer(s) take data from it for processing. This is more specific than a task queue.

blocking_queue<T> is a workhorse of multi-threaded programming. Its implementation can follow (Array|Linked)BlockingQueue in Java 5 util.concurrent; in C++ a deque usually serves as the underlying container. The Java 5 code is very readable, its basic structure matches the textbook (1 mutex, 2 condition variables), and it is much more robust. If you do not want to implement it yourself, use a ready-made library. (I have not used any of the free libraries, so I will not recommend one here. Interested readers can try concurrent_queue<T> in Intel Threading Building Blocks.)
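
The textbook structure of 1 mutex and 2 condition variables can be sketched as follows. This is a simplified illustration written with C++11 primitives, not a port of the Java classes; the names BoundedBlockingQueue and produceAndConsume are invented for this example.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <deque>
#include <mutex>
#include <thread>
#include <utility>

// Hypothetical bounded queue: put() blocks when full, take() blocks when empty.
template <typename T>
class BoundedBlockingQueue
{
 public:
  explicit BoundedBlockingQueue(std::size_t capacity) : capacity_(capacity)
  {
    assert(capacity_ > 0);
  }

  void put(T x)
  {
    std::unique_lock<std::mutex> lock(mutex_);
    while (queue_.size() >= capacity_) {
      notFull_.wait(lock);      // wait for a consumer to make room
    }
    queue_.push_back(std::move(x));
    notEmpty_.notify_one();
  }

  T take()
  {
    std::unique_lock<std::mutex> lock(mutex_);
    while (queue_.empty()) {
      notEmpty_.wait(lock);     // wait for a producer to add data
    }
    T front(std::move(queue_.front()));
    queue_.pop_front();
    notFull_.notify_one();
    return front;
  }

 private:
  const std::size_t capacity_;
  std::mutex mutex_;
  std::condition_variable notEmpty_;
  std::condition_variable notFull_;
  std::deque<T> queue_;
};

// Usage example: one producer thread, the calling thread consumes.
int produceAndConsume(int n)
{
  BoundedBlockingQueue<int> queue(2);
  std::thread producer([&queue, n] {
    for (int i = 0; i < n; ++i) {
      queue.put(i);
    }
  });
  int sum = 0;
  for (int i = 0; i < n; ++i) {
    sum += queue.take();
  }
  producer.join();
  return sum;
}
```

The small capacity in the usage example forces the producer to block on notFull_ several times, which exercises both condition variables.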

Summary

To sum up, my recommended multi-threaded server programming model is: event loop per thread + thread pool.

- The event loop is used for non-blocking IO and timers.

- The thread pool is used for computation, in the form of either a task queue or a producer-consumer queue.

Writing server programs this way requires a good Reactor-based network library. I have only used in-house products, so I cannot compare or recommend the common C++ network libraries on the market. Sorry.

Parameters such as the loop setup and the size of the thread pool must be tuned for the application. The basic principle is "impedance matching", so that both CPU and IO operate efficiently. Specific considerations will be discussed later.

Thread exit is not covered here; it is left for the next blog post, "Multi-threaded programming anti-patterns".

In addition, there may be individual threads that perform special tasks such as logging. These are basically invisible to the application, but they should be counted when allocating resources (CPU and IO) to avoid overestimating the system's capacity.

4 Inter-process communication and inter-thread communication

There are countless ways to do inter-process communication (IPC) under Linux. UNPv2 alone lists pipe, FIFO, POSIX message queue, shared memory, signals, and so on, not to mention sockets. There are also many synchronization primitives: mutex, condition variable, reader-writer lock, record locking, semaphore, and so on.

How to choose? In my personal experience, fewer but better: three or four carefully selected tools fully meet my work needs, I can use them fluently, and they are hard to get wrong.

5 Inter-process communication

For inter-process communication I prefer sockets (mainly TCP; I have used neither UDP nor Unix domain sockets). Their biggest advantage is that they work across hosts and therefore scale. The program is multi-process anyway, so if one machine lacks processing power, it is natural to use several machines: distribute the processes over multiple machines on the same LAN, change the host:port configuration, and the program keeps working. By contrast, the other IPC mechanisms listed above cannot cross machines (shared memory, for example, is the most efficient, but however efficient it is, it cannot share the memory of two machines), which limits scalability.

In programming terms, TCP sockets and pipes are both file descriptors used to send and receive byte streams, and both support read/write/fcntl/select/poll. The difference is that TCP is bidirectional while a pipe is one-way (on Linux), so two-way communication between processes needs two file descriptors, which is inconvenient; and the processes must have a parent-child relationship to use a pipe, which limits its use. For the communication model of sending and receiving byte streams, no IPC is more natural than sockets/TCP. Of course, the pipe also has a classic use: asynchronously waking up a select (or equivalent poll/epoll) call when writing a Reactor/Selector (the Sun JVM does this on Linux).
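
The pipe-based wakeup can be sketched as follows. This is only an illustration under stated assumptions: the class name Waker and the functions pollUntilWoken and demoCrossThreadWakeup are invented here, and a real Reactor would also poll its sockets in the same call and would normally make the pipe non-blocking.

```cpp
#include <poll.h>
#include <unistd.h>
#include <cassert>
#include <thread>

// Hypothetical helper: lets any thread interrupt a poll() blocked elsewhere.
class Waker
{
 public:
  Waker()
  {
    int rc = ::pipe(fds_);
    assert(rc == 0);
    (void)rc;
  }

  ~Waker()
  {
    ::close(fds_[0]);
    ::close(fds_[1]);
  }

  Waker(const Waker&) = delete;
  Waker& operator=(const Waker&) = delete;

  int readFd() const { return fds_[0]; }

  // Called from another thread: one byte makes the read end readable.
  void wakeup()
  {
    char one = 1;
    ssize_t n = ::write(fds_[1], &one, 1);
    (void)n;
  }

  // Called by the loop thread after poll() reported the read end readable.
  void drain()
  {
    char buf[64];
    ssize_t n = ::read(fds_[0], buf, sizeof buf);
    (void)n;
  }

 private:
  int fds_[2];
};

// Blocks in poll() for at most timeout_ms; returns true if it was woken up.
bool pollUntilWoken(Waker& waker, int timeout_ms)
{
  struct pollfd pfd = { waker.readFd(), POLLIN, 0 };
  int n = ::poll(&pfd, 1, timeout_ms);
  if (n > 0 && (pfd.revents & POLLIN)) {
    waker.drain();
    return true;
  }
  return false;
}

// Usage example: a second thread wakes up the polling thread.
bool demoCrossThreadWakeup()
{
  Waker waker;
  std::thread other([&waker] { waker.wakeup(); });
  bool woken = pollUntilWoken(waker, 5000);
  other.join();
  return woken;
}
```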

A TCP port is owned exclusively by one process, and the operating system reclaims it automatically (the listening port and established TCP sockets are all file descriptors, and the operating system closes all file descriptors when the process ends). So even if the program exits unexpectedly, it leaves no garbage in the system, and after a restart it can recover fairly easily without rebooting the operating system (a risk that does exist with cross-process mutexes). Another benefit: since the port is exclusive, it prevents the program from being started twice (the later process cannot get the port and naturally cannot work), which would otherwise cause unexpected results.

When two processes communicate via TCP and one of them crashes, the operating system closes the connection, so the other process notices almost immediately and can fail over quickly. An application-layer heartbeat is still indispensable, of course. I will cover the design of the heartbeat protocol when I discuss date and time handling on servers.

A natural benefit of TCP over other IPC mechanisms is that it is recordable and replayable: tcpdump/Wireshark are good helpers for settling protocol or state disputes between two processes.

In addition, if the network library has a "connection retry" feature, the processes in the system need not be started in a specific order, and any process can be restarted on its own. This matters a great deal for building a robust distributed system.

Communicating over a byte stream such as TCP incurs marshal/unmarshal overhead, which requires choosing a suitable message format, specifically a wire format. That will be the subject of my next blog post; for now I recommend Google Protocol Buffers.
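
Whatever wire format is chosen, a byte stream needs message boundaries. One common convention, shown here purely as an assumption and not as the format this article prescribes, is a 4-byte big-endian length header in front of each payload. The function names encodeFrame and decodeFrame are made up for this sketch.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>

// Prepends a 4-byte big-endian length header to the payload.
std::string encodeFrame(const std::string& payload)
{
  assert(payload.size() <= 0xFFFFFFFFu);
  uint32_t len = static_cast<uint32_t>(payload.size());
  std::string frame;
  frame.push_back(static_cast<char>((len >> 24) & 0xFF));
  frame.push_back(static_cast<char>((len >> 16) & 0xFF));
  frame.push_back(static_cast<char>((len >> 8) & 0xFF));
  frame.push_back(static_cast<char>(len & 0xFF));
  frame += payload;
  return frame;
}

// Tries to extract one complete message from the front of *buffer.
// On success stores it in *payload, removes it from *buffer, returns true.
// Returns false and leaves *buffer untouched if more bytes are needed.
bool decodeFrame(std::string* buffer, std::string* payload)
{
  const std::size_t kHeaderLen = 4;
  if (buffer->size() < kHeaderLen) {
    return false;
  }
  uint32_t len = 0;
  for (std::size_t i = 0; i < kHeaderLen; ++i) {
    len = (len << 8) | static_cast<unsigned char>((*buffer)[i]);
  }
  if (buffer->size() - kHeaderLen < len) {
    return false;  // header is complete, body is not
  }
  payload->assign(*buffer, kHeaderLen, len);
  buffer->erase(0, kHeaderLen + len);
  return true;
}
```

Because TCP may deliver a message in pieces or several messages at once, the receiver appends whatever it reads to a buffer and calls decodeFrame in a loop until it returns false. A production decoder would also reject lengths above some sane maximum.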

Some may say that each problem deserves its own analysis: if the two processes are on the same machine, use shared memory; otherwise use TCP. MS SQL Server, for example, supports both communication modes. My question is: is it worth increasing the complexity of the code for that bit of performance? TCP is a byte stream protocol that can only be read sequentially and has write buffering; shared memory is a message protocol in which process a fills a block of memory for process b to read, basically in stop-and-wait fashion. Putting both into one program requires an abstraction layer that wraps the two IPC mechanisms. This makes things opaque and increases the complexity of testing, and if one of the communicating parties crashes, reconciling state is more troublesome than with sockets. It is not for me. Besides, are you willing to let a SQL Server that cost tens of thousands share machine resources with your program? Database servers in production are often dedicated high-end machines and generally do not run other resource-hungry programs at the same time.

TCP itself is a data stream protocol. Besides using it directly for communication, you can build upper-layer protocols such as RPC/REST/SOAP on top of it. And besides point-to-point communication, application-level broadcast protocols are also very useful, making it easy to build scalable and controllable distributed systems.

This article does not go into network programming in Reactor mode. There are in fact many points worth noting there, such as retrying connections with back-off and organizing timers with a priority queue, which are left for later analysis.
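
As a small preview of organizing timers with a priority queue, here is a minimal sketch under my own assumptions: time is passed in explicitly as milliseconds, and the class name TimerQueue and its methods are invented for illustration rather than taken from any library. The value returned by msUntilNext is the kind of number an event loop would pass to poll as its timeout.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <queue>
#include <utility>
#include <vector>

// Hypothetical timer queue: a min-heap ordered by expiration time.
class TimerQueue
{
 public:
  typedef std::function<void()> Callback;

  void runAt(int64_t whenMs, Callback cb)
  {
    heap_.push(Entry{whenMs, nextSeq_++, std::move(cb)});
  }

  // Milliseconds until the earliest timer, 0 if one is already due,
  // or -1 if there is no timer (poll's "wait forever").
  int msUntilNext(int64_t nowMs) const
  {
    if (heap_.empty()) {
      return -1;
    }
    int64_t delta = heap_.top().when - nowMs;
    return delta > 0 ? static_cast<int>(delta) : 0;
  }

  // Runs every callback whose deadline is <= nowMs; returns how many ran.
  int runExpired(int64_t nowMs)
  {
    int ran = 0;
    while (!heap_.empty() && heap_.top().when <= nowMs) {
      Callback cb = heap_.top().cb;  // copy, because top() is const
      heap_.pop();
      cb();
      ++ran;
    }
    return ran;
  }

 private:
  struct Entry
  {
    int64_t when;
    uint64_t seq;  // keeps timers with equal deadlines in insertion order
    Callback cb;
  };

  struct Later
  {
    bool operator()(const Entry& a, const Entry& b) const
    {
      return a.when != b.when ? a.when > b.when : a.seq > b.seq;
    }
  };

  std::priority_queue<Entry, std::vector<Entry>, Later> heap_;
  uint64_t nextSeq_ = 0;
};
```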

6 Thread synchronization

The four principles of thread synchronization, ranked by importance:

1. The first principle is to minimize the sharing of objects and so reduce the need for synchronization. If an object need not be exposed to other threads, do not expose it; if it must be exposed, prefer an immutable object; only when that is impossible, expose a mutable object and protect it fully with synchronization.

2. Second, use high-level concurrent programming components such as TaskQueue, Producer-Consumer Queue, CountDownLatch, and so on.

3. Finally, when you must use low-level synchronization primitives, use only non-recursive mutexes and condition variables, and occasionally a read-write lock.

4. Do not write your own lock-free code, and do not guess which approach will perform better, for example spin lock vs. mutex.

The first two are easy to understand. Here we focus on the third: the use of low-level synchronization primitives.

Mutex

The mutex is probably the most used synchronization primitive. Roughly speaking, it protects a critical section: at most one thread can be active in the critical section at any time. (Note that I mean the mutex in pthreads, not the heavyweight cross-process Mutex in Windows.) When using a mutex on its own, the main goal is to protect shared data. My personal principles are:

- Use RAII to encapsulate the four operations of creating, destroying, locking, and unlocking a mutex.

- Use only non-recursive mutexes (i.e. non-reentrant mutexes).

- Never call lock() and unlock() by hand; leave everything to the constructor and destructor of a Guard object on the stack. The lifetime of the Guard object exactly equals the critical section (working out when an object is destructed is a basic skill for C++ programmers). This guarantees locking and unlocking in the same function and avoids locking in foo() and unlocking in bar().

- Each time you construct a Guard object, think about the locks already held along the way (on the call stack) to prevent deadlocks caused by inconsistent locking order. Since the Guard object lives on the stack, it is convenient to analyze lock usage by looking at the function call stack.

The secondary principles are:

- Do not use cross-process mutexes; use only TCP sockets for inter-process communication.

- Lock and unlock in the same thread; thread a must not unlock a mutex that thread b has locked. (Guaranteed automatically by RAII.)

- Do not forget to unlock. (Guaranteed automatically by RAII.)

- Do not unlock twice. (Guaranteed automatically by RAII.)

- If necessary, consider using PTHREAD_MUTEX_ERRORCHECK for troubleshooting.

Encapsulating these operations with RAII is common practice, almost standard in C++. I will give concrete code later; I believe everyone has written or used similar code. The synchronized statement in Java and the using statement in C# have a similar effect: they guarantee that the lock is held for exactly one scope and that unlocking is not forgotten because of an exception.

The mutex is probably the simplest synchronization primitive. If you follow the principles above, it is almost impossible to misuse. I have never violated them myself, and problems that came up during coding were quickly found and fixed.

Digression: non-recursive mutex

Let me explain my personal reasons for sticking to non-recursive mutexes.

Mutexes are either recursive or non-recursive. That is the POSIX terminology; the other names are reentrant and non-reentrant. As inter-thread synchronization tools the two do not differ. The only difference is that the same thread can lock a recursive mutex repeatedly, but cannot lock a non-recursive mutex repeatedly.

I prefer the non-recursive mutex not for performance but to express design intent. The performance difference between the two is actually small; the non-recursive one is slightly faster because it needs one counter less. Locking a non-recursive mutex more than once in the same thread immediately leads to a deadlock. I consider this an advantage: it forces us to think about what the code expects of its locks and to find problems early (at the coding stage).

There is no doubt that a recursive mutex is more convenient, because you need not worry about a thread locking itself out. I guess this is why Java and Windows provide recursive mutexes by default. (The intrinsic lock built into the Java language is reentrant, the concurrent library provides ReentrantLock, and Windows' CRITICAL_SECTION is also reentrant. Neither seems to provide a lightweight non-recursive mutex.)

Precisely because of this convenience, a recursive mutex may hide problems in the code. The typical case: you think that having acquired the lock you may modify the object, not realizing that outer code already holds the lock and is modifying (or reading) the same object. A concrete example:

    std::vector<Foo> foos;
    MutexLock mutex;

    void post(const Foo& f)
    {
      MutexLockGuard lock(mutex);
      foos.push_back(f);
    }

    void traverse()
    {
      MutexLockGuard lock(mutex);
      for (auto it = foos.begin(); it != foos.end(); ++it) { // uses C++0x auto
        it->doit();
      }
    }

post() locks and then modifies the foos object; traverse() locks and then iterates over the foos vector. Suppose that one day Foo::doit() indirectly calls post() (which is a logic error). Then things get dramatic:

1. If the mutex is non-recursive, the program deadlocks.

2. If the mutex is recursive, push_back may (but does not always) invalidate the vector's iterators, so the program crashes occasionally.

This is where the non-recursive mutex shows its superiority: it exposes the program's logic error. A deadlock is relatively easy to debug: print the call stack of every thread ((gdb) thread apply all bt), and as long as no function is particularly long, it is easy to see how the program died. (Seen the other way, this is a reason not to write functions that are too long.) Alternatively, PTHREAD_MUTEX_ERRORCHECK finds the error right away (provided MutexLock has a debug option).

The program is going to die anyway, so it had better die meaningfully and make the coroner's job easier.
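
To show what PTHREAD_MUTEX_ERRORCHECK buys, the following sketch locks an error-checking mutex twice from the same thread. With this mutex type POSIX specifies that the second pthread_mutex_lock() returns EDEADLK instead of hanging. The function name relockErrorCheckMutex is made up for this example.

```cpp
#include <pthread.h>
#include <cassert>
#include <cerrno>

// Returns the error code produced by a second lock() from the same thread.
int relockErrorCheckMutex()
{
  pthread_mutexattr_t attr;
  pthread_mutexattr_init(&attr);
  pthread_mutexattr_settype(&attr, PTHREAD_MUTEX_ERRORCHECK);

  pthread_mutex_t mutex;
  pthread_mutex_init(&mutex, &attr);
  pthread_mutexattr_destroy(&attr);

  int first = pthread_mutex_lock(&mutex);
  assert(first == 0);
  (void)first;

  // A non-recursive mutex of the default type would typically block
  // forever here; the error-checking type reports the mistake instead.
  int second = pthread_mutex_lock(&mutex);

  pthread_mutex_unlock(&mutex);
  pthread_mutex_destroy(&mutex);
  return second;
}
```

A debug option in a mutex wrapper could switch to this type and abort with a clear message as soon as the return value is nonzero.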

If a function may be called both with the lock held and without it, split it into two functions:

1. One keeps the original name; it acquires the lock and then calls the second function.

2. The other adds the suffix WithLockHold to the name; it does not lock and contains the original function body.

Like this:

    void postWithLockHold(const Foo& f);  // defined below

    void post(const Foo& f)
    {
      MutexLockGuard lock(mutex);
      postWithLockHold(f); // don't worry about overhead, the compiler will inline it
    }

    // Introduced to express the author's intent, even though push_back could simply be inlined by hand
    void postWithLockHold(const Foo& f)
    {
      foos.push_back(f);
    }

This can go wrong in two ways (thanks to ilovecpp of the Shuimu forum): a) the locking version is misused, causing a deadlock; b) the non-locking version is misused, corrupting data.

For a), the method described earlier makes it fairly easy to track down. For b), if pthreads provided isLocked(), we could write:

    void postWithLockHold(const Foo& f)
    {
      assert(mutex.isLocked()); // currently just a wish
      // ...
    }

In addition, the conspicuous WithLockHold suffix makes misuse in the program easy to spot.

C++ has no annotations, so methods or fields cannot be marked with @GuardedBy as in Java, and programmers have to be careful. The approach here cannot solve all multi-threading errors once and for all, but it helps a little.
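
pthreads has no isLocked(), but a wrapper can approximate the wish by remembering which thread holds the lock. The sketch below is one possible approach, not part of the MutexLock class in this article: it assumes C++11, and the names CheckedMutex and isLockedByThisThread, as well as the int-based post functions, are invented for illustration.

```cpp
#include <atomic>
#include <cassert>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical wrapper: a non-recursive mutex that records its holder.
class CheckedMutex
{
 public:
  CheckedMutex() : holder_(std::thread::id()) {}

  void lock()
  {
    mutex_.lock();
    holder_.store(std::this_thread::get_id());
  }

  void unlock()
  {
    holder_.store(std::thread::id());  // a default id means "no holder"
    mutex_.unlock();
  }

  bool isLockedByThisThread() const
  {
    return holder_.load() == std::this_thread::get_id();
  }

 private:
  std::mutex mutex_;
  std::atomic<std::thread::id> holder_;
};

CheckedMutex g_mutex;
std::vector<int> g_items;

// Must be called with g_mutex held; the assert documents and checks that.
void postWithLockHold(int x)
{
  assert(g_mutex.isLockedByThisThread());
  g_items.push_back(x);
}

void post(int x)
{
  std::lock_guard<CheckedMutex> lock(g_mutex);  // RAII lock and unlock
  postWithLockHold(x);
}
```

The check only answers "does the calling thread hold the lock", which is exactly the question a WithLockHold function needs answered.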

I have not yet encountered a need for a recursive mutex. I think that if I do in the future, I can use a wrapper to switch to a non-recursive mutex, and the code will only become clearer.

This article only covers the correct use of the mutex itself. Multithreaded programming in C++ runs into many other race conditions; please refer to the article "When destructor encounters multithreading - thread-safe object callback in C++"

http://blog.csdn.net/Solstice/archive/2010/01/22/5238671.aspx . Please note that the class naming here is different from that article. I now think that MutexLock and MutexLockGuard are better names.

Performance footnote: on Linux the pthreads mutex is implemented with futex, which does not need to trap into the kernel on every lock and unlock, so it is very efficient. Windows' CRITICAL_SECTION is similar.

Condition variable

As the name suggests, with a condition variable one or more threads wait for some Boolean expression to become true, that is, wait for another thread to "wake" them. The academic name for the condition variable is the monitor. The Java Object's built-in wait(), notify(), and notifyAll() are condition variables (and they are easy to misuse). There is only one correct way to use a condition variable. For the wait() side:

1. It must be used together with a mutex, and reads and writes of the Boolean expression must be protected by that mutex.

2. Call wait() only while the mutex is locked.

3. Put the check of the Boolean condition and the wait() call inside a while loop.

In code:

    MutexLock mutex;
    Condition cond(mutex);
    std::deque<int> queue;

    int dequeue()
    {
      MutexLockGuard lock(mutex);
      while (queue.empty()) { // must use a loop; must call wait() only after checking
        cond.wait(); // atomically unlocks mutex and blocks, so it cannot deadlock with enqueue
      }
      assert(!queue.empty());
      int top = queue.front();
      queue.pop_front();
      return top;
    }

For the signal/broadcast side:

1. In theory, signal does not have to be called with the mutex locked.

2. Normally, modify the Boolean expression before signaling.

3. Modifying the Boolean expression usually has to be protected by the mutex (which at least acts as a full memory barrier).

In code:

    void enqueue(int x)
    {
      MutexLockGuard lock(mutex);
      queue.push_back(x);
      cond.notify();
    }

The above dequeue/enqueue actually implements a simple unbounded BlockingQueue.

Condition variables are very low-level synchronization primitives and are rarely used directly. They are generally used to implement higher-level synchronization facilities such as BlockingQueue or CountDownLatch.
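
BlockingQueue was shown above; CountDownLatch follows the same rules (check the condition in a while loop, modify it under the mutex). The following is a minimal sketch written with C++11 primitives rather than the MutexLock and Condition classes of this article. The interface loosely mirrors Java's class of the same name, and the function runWorkersAndWait is made up for the usage example.

```cpp
#include <atomic>
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <thread>
#include <vector>

class CountDownLatch
{
 public:
  explicit CountDownLatch(int count) : count_(count) { assert(count >= 0); }

  // Blocks until the count reaches zero.
  void wait()
  {
    std::unique_lock<std::mutex> lock(mutex_);
    while (count_ > 0) {  // the loop guards against spurious wakeups
      cond_.wait(lock);
    }
  }

  // Decrements the count and wakes all waiters when it reaches zero.
  void countDown()
  {
    std::lock_guard<std::mutex> lock(mutex_);
    if (count_ > 0 && --count_ == 0) {
      cond_.notify_all();
    }
  }

 private:
  std::mutex mutex_;
  std::condition_variable cond_;
  int count_;
};

// Usage example: the caller waits until every worker has reported in.
int runWorkersAndWait(int numWorkers)
{
  CountDownLatch latch(numWorkers);
  std::atomic<int> done(0);
  std::vector<std::thread> workers;
  for (int i = 0; i < numWorkers; ++i) {
    workers.emplace_back([&done, &latch] {
      ++done;             // the "work"
      latch.countDown();  // report completion
    });
  }
  latch.wait();
  int seen = done.load();  // every increment happened before wait() returned
  for (std::thread& t : workers) {
    t.join();
  }
  return seen;
}
```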

Read-write locks and others

The reader-writer lock is an excellent abstraction that clearly distinguishes read behavior from write behavior. Note that the reader lock is reentrant, while the writer lock is not (and a reader lock cannot be upgraded to a writer lock). This is the main reason I call it "excellent".

When I face concurrent reads and writes and the conditions are right, I use the approach described in "shared_ptr to achieve thread-safe copy-on-write" http://blog.csdn.net/Solstice/archive/2008/11/22/3351751.aspx instead of a read-write lock. Of course this is not absolute.
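
The general idea can be sketched as follows. This is a simplified variant and not the code from the linked article: readers take a snapshot (a shared_ptr copy) under a short lock and then read without any lock, while every write replaces the data with a modified copy. Always copying on write is my simplification to keep the sketch obviously correct. The names CowList and IntList are invented for illustration.

```cpp
#include <cassert>
#include <memory>
#include <mutex>
#include <vector>

typedef std::vector<int> IntList;

// Hypothetical container: many lock-free readers, occasional writers.
class CowList
{
 public:
  CowList() : data_(std::make_shared<IntList>()) {}

  // Readers hold the mutex only while copying the pointer.
  // The returned snapshot never changes afterwards.
  std::shared_ptr<const IntList> snapshot() const
  {
    std::lock_guard<std::mutex> lock(mutex_);
    return data_;
  }

  // Writers never modify a list that readers may be looking at:
  // they copy it, change the copy, then publish the copy.
  void append(int x)
  {
    std::lock_guard<std::mutex> lock(mutex_);
    std::shared_ptr<IntList> copy = std::make_shared<IntList>(*data_);
    copy->push_back(x);
    data_ = copy;
  }

 private:
  mutable std::mutex mutex_;
  std::shared_ptr<IntList> data_;
};
```

An old snapshot stays alive for as long as some reader holds it and is freed automatically when the last reader lets go, so a reader iterating over its snapshot is never disturbed by a concurrent append.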

As for semaphores, I have never encountered a need for them, so I have no personal experience to share.

To make a bold claim: if a program needs to solve a complex IPC problem such as the "dining philosophers", I think the design should be examined first. Why is there such complicated resource contention between threads (one thread has to grab two resources at once, and each resource may be contended for by two threads)? Could the "wanting to eat" business be handed to one thread that allocates tableware to the philosophers, with each philosopher waiting on a simple condition variable until someone notifies him to eat? Philosophically speaking, the textbook solution is egalitarian: each philosopher has his own thread and grabs chopsticks himself. I would rather use a centralized approach, with one thread dedicated to distributing tableware and the other philosopher threads taking a number and waiting at the cafeteria entrance. This does not lose much efficiency, but it makes the program much simpler. Although Windows' WaitForMultipleObjects makes this problem trivial, correctly emulating WaitForMultipleObjects under Linux is not something ordinary programmers should attempt.

Encapsulating MutexLock, MutexLockGuard, and Condition

This section lists the code of the MutexLock, MutexLockGuard, and Condition classes used earlier. The first two are not difficult; the last one is a bit more interesting.

MutexLock encapsulates a critical section. It is a simple resource class that uses the RAII idiom [CCS:13] to encapsulate the creation and destruction of the mutex. On Windows the critical section is CRITICAL_SECTION, which is reentrant; on Linux it is pthread_mutex_t, which by default is not reentrant. A MutexLock is generally a data member of another class.

MutexLockGuard encapsulates entering and leaving the critical section, i.e. locking and unlocking. A MutexLockGuard is generally an object on the stack whose scope exactly equals the critical section.

You should be able to write these two classes from memory on paper; there is not much to explain:

    #include <pthread.h>
    #include "boost/noncopyable.hpp"

    class MutexLock : boost::noncopyable
    {
     public:
      MutexLock() // to save space, single-line functions are not formatted normally
      { pthread_mutex_init(&mutex_, NULL); }

      ~MutexLock()
      { pthread_mutex_destroy(&mutex_); }

      void lock() // not normally called directly by the program
      { pthread_mutex_lock(&mutex_); }

      void unlock() // not normally called directly by the program
      { pthread_mutex_unlock(&mutex_); }

      pthread_mutex_t* getPthreadMutex() // for Condition only; user code must not call it
      { return &mutex_; }

     private:
      pthread_mutex_t mutex_;
    };

    class MutexLockGuard : boost::noncopyable
    {
     public:
      explicit MutexLockGuard(MutexLock& mutex) : mutex_(mutex)
      { mutex_.lock(); }

      ~MutexLockGuard()
      { mutex_.unlock(); }

     private:
      MutexLock& mutex_;
    };

    #define MutexLockGuard(x) static_assert(false, "missing mutex guard var name")

Note that the last line of the code defines a macro that prevents the following mistake:

void doit()
{
  MutexLockGuard(mutex);  // no variable name: a temporary is created and destroyed immediately, so the critical section is not locked
  // the correct form is: MutexLockGuard lock(mutex);

  // critical section
}

MutexLock does not provide a trylock() function because I have never used one. I cannot think of a situation in which a program needs to "try to lock" a mutex; perhaps the code I write is too simple.

I have seen MutexLockGuard written as a template. I did not do that because its only possible template argument is MutexLock; there is no need to add flexibility for its own sake, so I instantiated the template by hand. A more radical approach is to make lock() and unlock() private and declare the guard a friend of MutexLock. I think it is enough to tell programmers in a comment not to call them directly, and misuse is easy to catch in code review before check-in (grep getPthreadMutex).

This code is not industrial strength: a) The mutex is created with type PTHREAD_MUTEX_DEFAULT rather than the PTHREAD_MUTEX_NORMAL we intend (in practice the two are probably equivalent); the rigorous approach is to use a mutexattr to specify the mutex type explicitly. b) Return values are not checked. assert cannot be used for this, because assert compiles to an empty statement in release builds. The point of checking return values is to catch resource exhaustion such as ENOMEM, which generally only happens in heavily loaded production programs. When such an error occurs, the program must clean up and exit immediately; otherwise it will crash mysteriously later and make the post-mortem difficult. What is needed is a non-debug assert; google-glog's CHECK() may be a good choice.

These two improvements are left as exercises.
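One possible shape for these two improvements is sketched below. It is an illustration under my own assumptions rather than the author's solution: MCHECK is a hypothetical stand-in for a release-mode check such as glog's CHECK(), and the names CheckedMutexLock, CheckedGuard, and countWithTwoThreads are invented for this example. The mutex type is set explicitly through a pthread_mutexattr_t, and every pthread call is checked even in release builds.

```cpp
#include <pthread.h>
#include <cstdio>
#include <cstdlib>
#include <cstring>

// Hypothetical always-on check: unlike assert, it stays active in release builds.
#define MCHECK(call)                                                      \
  do {                                                                    \
    int mcheck_ret = (call);                                              \
    if (mcheck_ret != 0) {                                                \
      std::fprintf(stderr, "%s:%d: %s failed: %s\n", __FILE__, __LINE__,  \
                   #call, std::strerror(mcheck_ret));                     \
      std::abort();                                                       \
    }                                                                     \
  } while (0)

class CheckedMutexLock {
 public:
  CheckedMutexLock() {
    pthread_mutexattr_t attr;
    MCHECK(pthread_mutexattr_init(&attr));
    // State the intended type instead of relying on PTHREAD_MUTEX_DEFAULT.
    MCHECK(pthread_mutexattr_settype(&attr, PTHREAD_MUTEX_NORMAL));
    MCHECK(pthread_mutex_init(&mutex_, &attr));
    MCHECK(pthread_mutexattr_destroy(&attr));
  }
  ~CheckedMutexLock() { MCHECK(pthread_mutex_destroy(&mutex_)); }
  void lock() { MCHECK(pthread_mutex_lock(&mutex_)); }
  void unlock() { MCHECK(pthread_mutex_unlock(&mutex_)); }

 private:
  CheckedMutexLock(const CheckedMutexLock&);             // noncopyable
  CheckedMutexLock& operator=(const CheckedMutexLock&);  // noncopyable
  pthread_mutex_t mutex_;
};

class CheckedGuard {
 public:
  explicit CheckedGuard(CheckedMutexLock& mutex) : mutex_(mutex) { mutex_.lock(); }
  ~CheckedGuard() { mutex_.unlock(); }

 private:
  CheckedGuard(const CheckedGuard&);             // noncopyable
  CheckedGuard& operator=(const CheckedGuard&);  // noncopyable
  CheckedMutexLock& mutex_;
};

struct CounterArgs {
  CheckedMutexLock* mutex;
  long* counter;
};

void* addMany(void* p) {
  CounterArgs* args = static_cast<CounterArgs*>(p);
  for (int i = 0; i < 100000; ++i) {
    CheckedGuard lock(*args->mutex);  // critical section is one increment
    ++*args->counter;
  }
  return NULL;
}

// Two threads increment a shared counter under the checked mutex.
long countWithTwoThreads() {
  CheckedMutexLock mutex;
  long counter = 0;
  CounterArgs args = {&mutex, &counter};
  pthread_t a, b;
  MCHECK(pthread_create(&a, NULL, addMany, &args));
  MCHECK(pthread_create(&b, NULL, addMany, &args));
  MCHECK(pthread_join(a, NULL));
  MCHECK(pthread_join(b, NULL));
  return counter;
}
```

Aborting on failure is a deliberate simplification here; a production program might log through its own facility before exiting.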

The implementation of the Condition class is a bit interesting.

The Pthreads condition variable lets you specify the mutex at wait() time, but I cannot think of a reason why one condition variable would be used with different mutexes. Neither Java's intrinsic condition nor its Condition class supports this, so I think this flexibility can be given up in favor of a plain one-to-one pairing. By contrast, the condition_variable of boost::thread takes the mutex at wait time. Take a look at the sprawling design of its synchronization primitives:

- Concepts, four kinds: Lockable, TimedLockable, SharedLockable, UpgradeLockable.
- Locks, five or six kinds: lock_guard, unique_lock, shared_lock, upgrade_lock, upgrade_to_unique_lock, scoped_try_lock.
- Mutexes, seven kinds: mutex, try_mutex, timed_mutex, recursive_mutex, recursive_try_mutex, recursive_timed_mutex, shared_mutex.

Forgive my dullness, but when I see a library as "flexible" as a Rube Goldberg machine, such as boost::thread, I can only bow politely and take a detour. The class names are baffling too: why not simply use a plain name like reader_writer_lock? Inventing new names only adds to the mental burden. I am not willing to pay the price for this kind of flexibility; I would rather write a few simple classes of my own that can be understood at a glance. Reinventing a wheel that is only a few lines long does no harm. Providing flexibility is certainly a skill, but hard-coding things where flexibility is not needed takes greater wisdom.

The Condition class below is a thin wrapper around the pthread condition variable and is easy to use; see the examples earlier in this section. I use notify/notifyAll as the function names because signal has other meanings, such as signal/slot in C++ and signal handlers in C. Let's not overload the term.

class Condition : boost::noncopyable
{
 public:
  Condition(MutexLock& mutex) : mutex_(mutex)
  { pthread_cond_init(&pcond_, NULL); }

  ~Condition()
  { pthread_cond_destroy(&pcond_); }

  void wait()
  { pthread_cond_wait(&pcond_, mutex_.getPthreadMutex()); }

  void notify()
  { pthread_cond_signal(&pcond_); }

  void notifyAll()
  { pthread_cond_broadcast(&pcond_); }

 private:
  MutexLock& mutex_;
  pthread_cond_t pcond_;
};

如果一个class è¦åŒ…å«MutexLock å’ŒCondition,请注æ„它们的声明顺åºå’Œåˆå§‹åŒ–顺åºï¼Œmutex_ 应先于condition_ æž„é€ ï¼Œå¹¶ä½œä¸ºåŽè€…çš„æž„é€ å‚数:

class CountDownLatch
{
 public:
  CountDownLatch(int count)
    : count_(count),
      mutex_(),
      condition_(mutex_)
  { }

 private:
  int count_;
  MutexLock mutex_;  // the order matters: mutex_ must be declared before condition_
  Condition condition_;
};
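The class above shows only the constructor and the members. A fuller sketch might look like the following. It is my own illustration: the wait() and countDown() bodies are assumptions about how such a latch is usually written, the three helper classes are compact boost-free copies so that the example is self-contained, and WorkerArgs, worker, and runWorkers are hypothetical names used only for the demonstration.

```cpp
#include <pthread.h>

class MutexLock {
 public:
  MutexLock() { pthread_mutex_init(&mutex_, NULL); }
  ~MutexLock() { pthread_mutex_destroy(&mutex_); }
  void lock() { pthread_mutex_lock(&mutex_); }
  void unlock() { pthread_mutex_unlock(&mutex_); }
  pthread_mutex_t* getPthreadMutex() { return &mutex_; }

 private:
  MutexLock(const MutexLock&);             // noncopyable
  MutexLock& operator=(const MutexLock&);  // noncopyable
  pthread_mutex_t mutex_;
};

class MutexLockGuard {
 public:
  explicit MutexLockGuard(MutexLock& mutex) : mutex_(mutex) { mutex_.lock(); }
  ~MutexLockGuard() { mutex_.unlock(); }

 private:
  MutexLockGuard(const MutexLockGuard&);             // noncopyable
  MutexLockGuard& operator=(const MutexLockGuard&);  // noncopyable
  MutexLock& mutex_;
};

class Condition {
 public:
  explicit Condition(MutexLock& mutex) : mutex_(mutex) { pthread_cond_init(&pcond_, NULL); }
  ~Condition() { pthread_cond_destroy(&pcond_); }
  void wait() { pthread_cond_wait(&pcond_, mutex_.getPthreadMutex()); }
  void notifyAll() { pthread_cond_broadcast(&pcond_); }

 private:
  Condition(const Condition&);             // noncopyable
  Condition& operator=(const Condition&);  // noncopyable
  MutexLock& mutex_;
  pthread_cond_t pcond_;
};

class CountDownLatch {
 public:
  explicit CountDownLatch(int count) : count_(count), mutex_(), condition_(mutex_) {}

  void wait() {
    MutexLockGuard lock(mutex_);
    while (count_ > 0)  // loop guards against spurious wakeups
      condition_.wait();
  }

  void countDown() {
    MutexLockGuard lock(mutex_);
    if (--count_ == 0) condition_.notifyAll();
  }

 private:
  int count_;
  MutexLock mutex_;  // declared before condition_, which stores a reference to it
  Condition condition_;
};

struct WorkerArgs {
  CountDownLatch* latch;
  int* slot;
};

void* worker(void* p) {
  WorkerArgs* args = static_cast<WorkerArgs*>(p);
  *args->slot = 1;           // pretend to finish some initialization
  args->latch->countDown();  // report completion
  return NULL;
}

// Starts four workers, waits on the latch, and returns how many have reported.
int runWorkers() {
  const int kWorkers = 4;
  CountDownLatch latch(kWorkers);
  int done[kWorkers] = {0};
  WorkerArgs args[kWorkers];
  pthread_t threads[kWorkers];
  for (int i = 0; i < kWorkers; ++i) {
    args[i].latch = &latch;
    args[i].slot = &done[i];
    pthread_create(&threads[i], NULL, worker, &args[i]);
  }
  latch.wait();  // returns only after every worker has counted down
  int sum = 0;
  for (int i = 0; i < kWorkers; ++i) sum += done[i];
  for (int i = 0; i < kWorkers; ++i) pthread_join(threads[i], NULL);
  return sum;
}
```

The threads are joined before the latch goes out of scope, so no worker can still be inside countDown() when the latch is destroyed.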

请å…许我å†æ¬¡å¼ºè°ƒï¼Œè™½ç„¶æœ¬èŠ‚花了大é‡ç¯‡å¹…介ç»å¦‚何æ£ç¡®ä½¿ç”¨mutex å’Œcondition variable,但并ä¸ä»£è¡¨æˆ‘鼓励到处使用它们。这两者都是éžå¸¸åº•å±‚çš„åŒæ¥åŽŸè¯ï¼Œä¸»è¦ç”¨æ¥å®žçŽ°æ›´é«˜çº§çš„并å‘编程工具,一个多线程程åºé‡Œå¦‚果大é‡ä½¿ç”¨mutex å’Œcondition variable æ¥åŒæ¥ï¼ŒåŸºæœ¬è·Ÿç”¨é“…ç¬”åˆ€é”¯å¤§æ ‘ï¼ˆåŸå²©è¯ï¼‰æ²¡å•¥åŒºåˆ«ã€‚

线程安全的Singleton 实现

ç ”ç©¶Signleton 的线程安全实现的历å²ä½ 会å‘现很多有æ„æ€çš„事情,一度人们认为Double checked locking 是王é“,兼顾了效率与æ£ç¡®æ€§ã€‚åŽæ¥æœ‰ç¥žç‰›æŒ‡å‡ºç”±äºŽä¹±åºæ‰§è¡Œçš„å½±å“,DCL 是é ä¸ä½çš„。(这个åˆè®©æˆ‘想起了SQL 注入,åå¹´å‰ç”¨å—符串拼接出SQL è¯å¥æ˜¯Web å¼€å‘的通行åšæ³•ï¼Œç›´åˆ°æœ‰ä¸€å¤©æœ‰äººåˆ©ç”¨è¿™ä¸ªæ¼æ´žè¶ŠæƒèŽ·å¾—并修改网站数æ®ï¼Œäººä»¬æ‰å¹¡ç„¶é†’悟,赶紧修补。)Java å¼€å‘者还算幸è¿ï¼Œå¯ä»¥å€ŸåŠ©å†…部é™æ€ç±»çš„装载æ¥å®žçŽ°ã€‚C++ 就比较惨,è¦ä¹ˆæ¬¡æ¬¡é”,è¦ä¹ˆeager initializeã€æˆ–者动用memory barrier è¿™æ ·çš„å¤§æ€å™¨ï¼ˆ http://Papers/DDJ_Jul_Aug_2004_revised.pdf )。接下æ¥Java 5 修订了内å˜æ¨¡åž‹ï¼Œå¹¶å¢žå¼ºäº†volatile çš„è¯ä¹‰ï¼Œè¿™ä¸‹DCL (with volatile) åˆæ˜¯å®‰å…¨çš„了。然而C++ 的内å˜æ¨¡åž‹è¿˜åœ¨ä¿®è®¢ä¸ï¼ŒC++ çš„volatile ç›®å‰è¿˜ä¸èƒ½ï¼ˆå°†æ¥ä¹Ÿéš¾è¯´ï¼‰ä¿è¯DCL çš„æ£ç¡®æ€§ï¼ˆåªåœ¨VS2005+ 上有效)。

其实没那么麻烦,在实践ä¸ç”¨pthread once 就行:

#include <pthread.h>
#include "boost/noncopyable.hpp"

template<typename T>
class Singleton : boost::noncopyable
{
 public:
  static T& instance()
  {
    pthread_once(&ponce_, &Singleton::init);  // init() runs exactly once, even under contention
    return *value_;
  }

  static void init()
  {
    value_ = new T();
  }

 private:
  static pthread_once_t ponce_;
  static T* value_;
};

template<typename T>
pthread_once_t Singleton<T>::ponce_ = PTHREAD_ONCE_INIT;

template<typename T>
T* Singleton<T>::value_ = NULL;

上é¢è¿™ä¸ªSingleton 没有任何花哨的技巧,用pthread_once_t æ¥ä¿è¯lazy-initialization 的线程安全。使用方法也很简å•ï¼š

Foo& foo = Singleton《Foo》::instance();

当然,这个Singleton 没有考虑对象的销æ¯ï¼Œåœ¨æœåŠ¡å™¨ç¨‹åºé‡Œï¼Œè¿™ä¸æ˜¯ä¸€ä¸ªé—®é¢˜ï¼Œå› 为当程åºé€€å‡ºçš„时候自然就释放所有资æºäº†ï¼ˆå‰æ是程åºé‡Œä¸ä½¿ç”¨ä¸èƒ½ç”±æ“作系统自动关é—的资æºï¼Œæ¯”如跨进程的Mutex)。å¦å¤–,这个Singleton åªèƒ½è°ƒç”¨é»˜è®¤æž„é€ å‡½æ•°ï¼Œå¦‚æžœç”¨æˆ·æƒ³è¦æŒ‡å®šT çš„æž„é€ æ–¹å¼ï¼Œæˆ‘们å¯ä»¥ç”¨æ¨¡æ¿ç‰¹åŒ–(template specialization) 技术æ¥æ供一个定制点,这需è¦å¼•å…¥å¦ä¸€å±‚间接。

归纳l 进程间通信首选TCP sockets

l 线程åŒæ¥çš„四项原则

l 使用互斥器的æ¡ä»¶å˜é‡çš„惯用手法(idiom),关键是RAII

ç”¨å¥½è¿™å‡ æ ·ä¸œè¥¿ï¼ŒåŸºæœ¬ä¸Šèƒ½åº”ä»˜å¤šçº¿ç¨‹æœåŠ¡ç«¯å¼€å‘çš„å„ç§åœºåˆï¼Œåªæ˜¯æˆ–许有人会觉得性能没有å‘挥到æžè‡´ã€‚我认为,先把程åºå†™æ£ç¡®äº†ï¼Œå†è€ƒè™‘性能优化,这在多线程下任然æˆç«‹ã€‚让一个æ£ç¡®çš„程åºå˜å¿«ï¼Œè¿œæ¯”“让一个快的程åºå˜æ£ç¡®â€å®¹æ˜“得多。

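7 Conclusion

In the era of multi-core computing, threads are unavoidable. Multi-threaded programming is an important personal skill, and it should not be rejected instinctively just because it is hard; software development today is already many times harder than it was 10 or 20 years ago. Only by mastering multi-threaded programming can you make a rational choice about whether to use threads at all, because you can estimate the difficulty and the benefit of a multi-threaded implementation and make the right decision at the outset. Bear in mind that converting a single-threaded program into a multi-threaded one is often harder than writing a multi-threaded program from scratch.

Mastering the synchronization primitives and the situations they suit is a basic skill in multi-threaded programming. In my experience, being fluent with the primitives mentioned in this article makes it fairly easy to write thread-safe programs. This article does not consider the effect of signals on multi-threaded programming. Unix signals behave in complicated ways under multi-threading, and they generally have to be shielded by the underlying network library (such as a Reactor) so that they do not interfere with application development.

Taking the article as a whole, "efficiency" is not my main concern: a) TCP is not the most efficient IPC; b) I advocate locking correctly rather than writing your own lock-free algorithms (using atomic operations excepted). Strike a balance between program complexity and performance, and consider the possibility of scaling up over the next two or three years (whether through faster CPUs, more cores, more machines, or a network upgrade). The next article, "Anti-patterns in multi-threaded programming", will examine common scalability mistakes. I believe that in a distributed system, scalability deserves more effort than single-machine performance optimization.

This article records my current understanding of multi-threaded programming. With the techniques described here I can handle every multi-threaded programming task I face. If the views in this article differ from yours, for example if you use techniques that I do not recommend (shared memory, semaphores, and so on), go ahead, as long as you have good reasons.

This article originally had two more sections, "Anti-patterns in multi-threaded programming" and "Application scenarios for multithreading". Since it has already passed ten thousand characters, they will have to wait for the next installment :-)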

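Preview of the next article: the sleep anti-pattern

I believe sleep should only appear in test code, for example when writing unit tests. (Unit tests that involve time are not easy to write. Short waits of a second or two can use sleep; long ones of an hour or a day need another approach, such as factoring out the algorithm and injecting the time.) Waiting by threads in production code falls into two kinds: waiting when there is nothing to do (either in select/poll/epoll or on a condition variable; waiting on a BlockingQueue or CountDownLatch also belongs here), and waiting to enter a critical section (on a mutex) in order to continue processing. If normal execution needs to wait for a period of time, register a timer with the event loop and carry on with the work in the timer's callback, because a thread is a precious shared resource and must not be wasted lightly. If the safety or efficiency of a multi-threaded program depends on the code calling sleep, the design is flawed. The right way to wait for an event is select, a condition variable, or (better still) a higher-level synchronization tool. Admittedly, GUI programming does sometimes yield the CPU voluntarily, for example by calling sleep(0) to implement yield.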
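As a rough illustration of "register a timer and continue in its callback", the sketch below uses Linux timerfd together with epoll. It is my own minimal example, not the event loop the author has in mind: runAfter, TimerCallback, countFire, and demoTimer are hypothetical names, the "loop" handles exactly one event, and a real loop would wait on its sockets with the same epoll_wait call.

```cpp
#include <errno.h>
#include <stdint.h>
#include <string.h>
#include <sys/epoll.h>
#include <sys/timerfd.h>
#include <time.h>
#include <unistd.h>

typedef void (*TimerCallback)(void* context);  // hypothetical callback type

// Arms a one-shot timer for delayMs (> 0) milliseconds, blocks in epoll_wait
// like an event loop would, and invokes callback when the timer fires.
// Returns true if the callback was invoked.
bool runAfter(int delayMs, TimerCallback callback, void* context) {
  int timerFd = timerfd_create(CLOCK_MONOTONIC, TFD_NONBLOCK | TFD_CLOEXEC);
  int epollFd = epoll_create1(EPOLL_CLOEXEC);
  bool fired = false;
  if (timerFd >= 0 && epollFd >= 0) {
    struct itimerspec spec;
    memset(&spec, 0, sizeof(spec));  // it_interval stays zero: one-shot timer
    spec.it_value.tv_sec = delayMs / 1000;
    spec.it_value.tv_nsec = (delayMs % 1000) * 1000000L;

    struct epoll_event event;
    memset(&event, 0, sizeof(event));
    event.events = EPOLLIN;
    event.data.fd = timerFd;

    if (timerfd_settime(timerFd, 0, &spec, NULL) == 0 &&
        epoll_ctl(epollFd, EPOLL_CTL_ADD, timerFd, &event) == 0) {
      struct epoll_event ready;
      int n;
      do {
        n = epoll_wait(epollFd, &ready, 1, -1);  // the thread sleeps in the kernel here
      } while (n < 0 && errno == EINTR);

      uint64_t expirations = 0;
      if (n == 1 && ready.data.fd == timerFd &&
          read(timerFd, &expirations, sizeof(expirations)) ==
              static_cast<ssize_t>(sizeof(expirations))) {
        callback(context);  // carry on with the work in the callback
        fired = true;
      }
    }
  }
  if (epollFd >= 0) close(epollFd);
  if (timerFd >= 0) close(timerFd);
  return fired;
}

void countFire(void* context) { ++*static_cast<int*>(context); }

// Schedules countFire 20 ms from now and returns how often it ran.
int demoTimer() {
  int fires = 0;
  runAfter(20, countFire, &fires);
  return fires;
}
```

In a real server the timer descriptor would sit in the same epoll set as the connections, so the thread can serve I/O while the delay elapses instead of being parked in sleep.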
